
Computer programming

From Wikipedia, the free encyclopedia

"Programming" redirects here. For other uses, see Programming (disambiguation).

Overview

There is an ongoing debate on the extent to which the writing of programs is an art, a craft or an engineering discipline.[1] In general, good programming is considered to be the measured application of all three, with the goal of producing an efficient and evolvable software solution (the criteria for "efficient" and "evolvable" vary considerably). The discipline differs from many other technical professions in that programmers, in general, do not need to be licensed or pass any standardized (or governmentally regulated) certification tests in order to call themselves "programmers" or even "software engineers." Because the discipline covers many areas, which may or may not include critical applications, it is debatable whether licensing is required for the profession as a whole. In most cases, the discipline is self-governed by the entities which require the programming, and sometimes very strict environments are defined (e.g. United States Air Force use of AdaCore and security clearance). However, representing oneself as a "Professional Software Engineer" without a license from an accredited institution is illegal in many parts of the world.

Another ongoing debate is the extent to which the programming language used in writing computer programs affects the form that the final program takes. This debate is analogous to that surrounding the Sapir–Whorf hypothesis[2] in linguistics and cognitive science, which postulates that a particular spoken language's nature influences the habitual thought of its speakers. Different language patterns yield different patterns of thought. This idea challenges the possibility of representing the world perfectly with language, because it acknowledges that the mechanisms of any language condition the thoughts of its speaker community.

History

See also: History of programming languages.

Wired control panel for an IBM 402 Accounting Machine.

In the late 1880s, Herman Hollerith invented the recording of data on a medium that could then be read by a machine. Prior uses of machine-readable media had been for control, not data. "After some initial trials with paper tape, he settled on punched cards..."[7] To process these punched cards, first known as "Hollerith cards", he invented the tabulator and the keypunch machine. These three inventions were the foundation of the modern information processing industry. In 1896 he founded the Tabulating Machine Company (which later became the core of IBM). The addition of a control panel (plugboard) to his 1906 Type I Tabulator allowed it to do different jobs without having to be physically rebuilt. By the late 1940s, there was a variety of control panel programmable machines, called unit record equipment, to perform data-processing tasks.

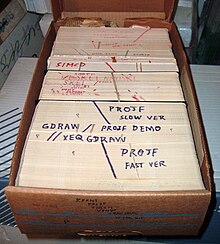

Data and instructions could be stored on external punched cards, which were kept in order and arranged in program decks.

In 1954, FORTRAN was invented; it was the first high-level programming language to have a functional implementation, as opposed to just a design on paper.[8][9] (A high-level language is, in very general terms, any programming language that allows the programmer to write programs in terms that are more abstract than assembly language instructions, i.e. at a level of abstraction "higher" than that of an assembly language.) It allowed programmers to specify calculations by entering a formula directly (e.g. Y = X*2 + 5*X + 9). The program text, or source, is converted into machine instructions using a special program called a compiler, which translates the FORTRAN program into machine language. In fact, the name FORTRAN stands for "Formula Translation". Many other languages were developed, including some for commercial programming, such as COBOL. Programs were mostly still entered using punched cards or paper tape. (See computer programming in the punch card era.) By the late 1960s, data storage devices and computer terminals had become inexpensive enough that programs could be created by typing directly into the computers. Text editors were developed that allowed changes and corrections to be made much more easily than with punched cards. (Usually, an error in punching a card meant that the card had to be discarded and a new one punched to replace it.)

As time has progressed, computers have made giant leaps in the area of processing power. This has brought about newer programming languages that are more abstracted from the underlying hardware. Although these high-level languages usually incur greater overhead, the increase in speed of modern computers has made the use of these languages much more practical than in the past. These increasingly abstracted languages typically are easier to learn and allow the programmer to develop applications much more efficiently and with less source code. However, high-level languages are still impractical for a few programs, such as those where low-level hardware control is necessary or where maximum processing speed is vital.

Throughout the second half of the twentieth century, programming was an attractive career in most developed countries. Some forms of programming have been increasingly subject to offshore outsourcing (importing software and services from other countries, usually at a lower wage), making programming career decisions in developed countries more complicated, while increasing economic opportunities in less developed areas. It is unclear how far this trend will continue and how deeply it will impact programmer wages and opportunities.

Modern programming

Quality requirements

Whatever the approach to software development may be, the final program must satisfy some fundamental properties. The following properties are among the most relevant:

- Reliability: how often the results of a program are correct. This depends on conceptual correctness of algorithms, and minimization of programming mistakes, such as mistakes in resource management (e.g., buffer overflows and race conditions) and logic errors (such as division by zero or off-by-one errors).

- Robustness: how well a program anticipates problems not due to programmer error. This includes situations such as incorrect, inappropriate or corrupt data, unavailability of needed resources such as memory, operating system services and network connections, and user error.

- Usability: the ergonomics of a program: the ease with which a person can use the program for its intended purpose, or in some cases even unanticipated purposes. Such issues can make or break its success even regardless of other issues. This involves a wide range of textual, graphical and sometimes hardware elements that improve the clarity, intuitiveness, cohesiveness and completeness of a program's user interface.

- Portability: the range of computer hardware and operating system platforms on which the source code of a program can be compiled/interpreted and run. This depends on differences in the programming facilities provided by the different platforms, including hardware and operating system resources, expected behaviour of the hardware and operating system, and availability of platform specific compilers (and sometimes libraries) for the language of the source code.

- Maintainability: the ease with which a program can be modified by its present or future developers in order to make improvements or customizations, fix bugs and security holes, or adapt it to new environments. Good practices during initial development make the difference in this regard. This quality may not be directly apparent to the end user but it can significantly affect the fate of a program over the long term.

- Efficiency/performance: the amount of system resources a program consumes (processor time, memory space, slow devices such as disks, network bandwidth and to some extent even user interaction): the less, the better. This also includes correct disposal of some resources, such as cleaning up temporary files and lack of memory leaks.
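As a rough illustration of the reliability and robustness properties in the list above, the following Python sketch guards against a division by zero and against corrupt input. The function name `mean_of_readings` and the sample data are hypothetical and were chosen only for this example.

```python
def mean_of_readings(raw_values):
    """Return the mean of the numeric entries in raw_values, or None if there are none.

    Robustness: entries that cannot be parsed as numbers are skipped
    instead of crashing the program.
    Reliability: the empty case is handled explicitly, so the function
    never divides by zero.
    """
    numbers = []
    for value in raw_values:
        try:
            numbers.append(float(value))
        except (TypeError, ValueError):
            # Corrupt or inappropriate data: ignore it and carry on.
            continue
    if not numbers:
        return None
    return sum(numbers) / len(numbers)


print(mean_of_readings(["3", "4.5", "oops", None]))  # 3.75
print(mean_of_readings([]))                          # None
```

Whether bad entries should be skipped, reported, or treated as fatal depends on the application; skipping is only one possible policy.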

Readability of source code

In computer programming, readability refers to the ease with which a human reader can comprehend the purpose, control flow, and operation of source code. It affects the aspects of quality above, including portability, usability and most importantly maintainability.

Readability is important because programmers spend the majority of their time reading, trying to understand and modifying existing source code, rather than writing new source code. Unreadable code often leads to bugs, inefficiencies, and duplicated code. A study[10] found that a few simple readability transformations made code shorter and drastically reduced the time to understand it.

Following a consistent programming style often helps readability. However, readability is more than just programming style. Many factors, having little or nothing to do with the ability of the computer to efficiently compile and execute the code, contribute to readability.[11] Some of these factors include:

- Different indentation styles (whitespace)

- Comments

- Decomposition

- Naming conventions for objects (such as variables, classes, procedures, etc.)
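A small, hypothetical illustration of these factors: the two Python versions below compute the same result, but the second uses descriptive names, documentation comments, and decomposition into a helper function. All names are invented for the example.

```python
# Hard to read: terse names and no statement of intent.
def f(a, b):
    return sum(x for x in a if x > b) / max(1, len([x for x in a if x > b]))


# Easier to read: descriptive names, docstrings, and decomposition.
def values_above(values, threshold):
    """Return the values strictly greater than threshold."""
    return [value for value in values if value > threshold]


def mean_above_threshold(values, threshold):
    """Return the mean of the values above threshold, or 0 if there are none."""
    selected = values_above(values, threshold)
    if not selected:
        return 0
    return sum(selected) / len(selected)


print(f([1, 5, 7], 4))                     # 6.0
print(mean_above_threshold([1, 5, 7], 4))  # 6.0
```

Neither version is faster in any meaningful way; the difference lies in how quickly a later reader can tell what the code is for.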

Algorithmic complexity

The academic field and the engineering practice of computer programming are both largely concerned with discovering and implementing the most efficient algorithms for a given class of problem. For this purpose, algorithms are classified into orders using so-called Big O notation, O(n), which expresses resource use, such as execution time or memory consumption, in terms of the size of an input. Expert programmers are familiar with a variety of well-established algorithms and their respective complexities and use this knowledge to choose algorithms that are best suited to the circumstances.

Methodologies

The first step in most formal software development processes is requirements analysis, followed by testing to determine value modeling, implementation, and failure elimination (debugging). Many differing approaches exist for each of those tasks. One approach popular for requirements analysis is use case analysis. Nowadays many programmers use forms of Agile software development, in which the various stages of formal software development are integrated into short cycles that take a few weeks rather than years. There are many approaches to the software development process.

Popular modeling techniques include Object-Oriented Analysis and Design (OOAD) and Model-Driven Architecture (MDA). The Unified Modeling Language (UML) is a notation used for both OOAD and MDA.

A similar technique used for database design is Entity-Relationship Modeling (ER Modeling).

Implementation techniques include imperative languages (object-oriented or procedural), functional languages, and logic languages.

Measuring language usage

It is very difficult to determine which modern programming languages are the most popular. Some languages are very popular for particular kinds of applications (e.g., COBOL is still strong in the corporate data center, often on large mainframes, FORTRAN in engineering applications, scripting languages in web development, and C in embedded applications), while some languages are regularly used to write many different kinds of applications. Also, many applications use a mix of several languages in their construction and use.

Methods of measuring programming language popularity include: counting the number of job advertisements that mention the language,[12] the number of books teaching the language that are sold (this overestimates the importance of newer languages), and estimates of the number of existing lines of code written in the language (this underestimates the number of users of business languages such as COBOL).

Debugging

A bug, which was debugged in 1947

Debugging is often done with IDEs like Eclipse, KDevelop, NetBeans, Code::Blocks, and Visual Studio. Standalone debuggers like gdb are also used; these usually offer a command-line interface rather than a visual environment.

Programming languages

Main articles: Programming language and List of programming languages

Different programming languages support different styles of programming (called programming paradigms). The choice of language used is subject to many considerations, such as company policy, suitability to task, availability of third-party packages, or individual preference. Ideally, the programming language best suited for the task at hand will be selected. Trade-offs from this ideal involve finding enough programmers who know the language to build a team, the availability of compilers for that language, and the efficiency with which programs written in a given language execute. Languages form an approximate spectrum from "low-level" to "high-level"; "low-level" languages are typically more machine-oriented and faster to execute, whereas "high-level" languages are more abstract and easier to use but execute less quickly. It is usually easier to code in "high-level" languages than in "low-level" ones.

Allen Downey, in his book How To Think Like A Computer Scientist, writes:

- The details look different in different languages, but a few basic instructions appear in just about every language:

- input: Get data from the keyboard, a file, or some other device.

- output: Display data on the screen or send data to a file or other device.

- arithmetic: Perform basic arithmetical operations like addition and multiplication.

- conditional execution: Check for certain conditions and execute the appropriate sequence of statements.

- repetition: Perform some action repeatedly, usually with some variation.
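All five basic instructions in the list above can be seen in a very short program. The following Python sketch is only an illustration; the function name `sum_of_evens` and the sample input are hypothetical and do not come from Downey's book.

```python
import io


def sum_of_evens(stream):
    """Read whitespace-separated integers from stream and add up the even ones."""
    total = 0
    for token in stream.read().split():   # input, then repetition over the tokens
        number = int(token)
        if number % 2 == 0:                # conditional execution
            total = total + number         # arithmetic
    return total


# A StringIO object stands in for the keyboard or a file.
result = sum_of_evens(io.StringIO("1 2 3 4 10"))
print(result)                              # output: prints 16
```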

Programmers

Main article: Programmer

See also: Software developer and Software engineer.

Computer programmers are those who write computer software.

See also

Main article: Outline of computer programming

- ACCU

- Association for Computing Machinery

- Computer networking

- Computer programming in the punch card era

- Computer science

- Computing

- Hello world program

- List of basic computer programming topics

- List of computer programming topics

- Programming paradigms

- Software engineering

Central processing unit


"CPU" redirects here. For other uses, see CPU (disambiguation).

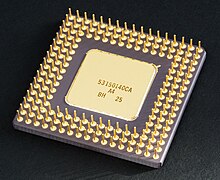

An Intel 80486DX2 CPU from above

On large machines, CPUs require one or more printed circuit boards. On personal computers and small workstations, the CPU is housed in a single chip called a microprocessor. Since the 1970s, the microprocessor class of CPUs has almost completely overtaken all other CPU implementations.

The CPU itself is an internal component of the computer. Modern CPUs are small and square and contain multiple metallic connectors or pins on the underside. The CPU is inserted directly into a CPU socket, pin side down, on the motherboard.

Each motherboard supports only a specific type or range of CPU, so the motherboard manufacturer's specifications should be checked before attempting to replace or upgrade a CPU. Modern CPUs also have an attached heat sink and small fan that sit directly on top of the CPU to help dissipate heat.

Two typical components of a CPU are the arithmetic logic unit (ALU), which performs arithmetic and logical operations, and the control unit (CU), which extracts instructions from memory and decodes and executes them, calling on the ALU when necessary.

History

Main article: History of general purpose CPUs

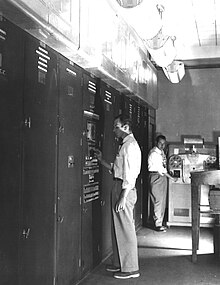

EDVAC, one of the first electronic stored program computers

The idea of a stored-program computer was already present in the design of J. Presper Eckert and John William Mauchly's ENIAC, but was initially omitted so the machine could be finished sooner. On June 30, 1945, before ENIAC was completed, mathematician John von Neumann distributed the paper entitled First Draft of a Report on the EDVAC. It outlined the design of a stored-program computer that would eventually be completed in August 1949.[2] EDVAC was designed to perform a certain number of instructions (or operations) of various types. These instructions could be combined to create useful programs for the EDVAC to run. Significantly, the programs written for EDVAC were stored in high-speed computer memory rather than specified by the physical wiring of the computer. This overcame a severe limitation of ENIAC, which was the considerable time and effort required to reconfigure the computer to perform a new task. With von Neumann's design, the program, or software, that EDVAC ran could be changed simply by changing the contents of the computer's memory.

Early CPUs were custom-designed as a part of a larger, sometimes one-of-a-kind, computer. However, this costly method of designing custom CPUs for a particular application has largely given way to the development of mass-produced processors that are made for one or many purposes. This standardization trend generally began in the era of discrete transistor mainframes and minicomputers and has rapidly accelerated with the popularization of the integrated circuit (IC). The IC has allowed increasingly complex CPUs to be designed and manufactured to tolerances on the order of nanometers. Both the miniaturization and standardization of CPUs have increased the presence of these digital devices in modern life far beyond the limited application of dedicated computing machines. Modern microprocessors appear in everything from automobiles to cell phones and children's toys.

While von Neumann is most often credited with the design of the stored-program computer because of his design of EDVAC, others before him, such as Konrad Zuse, had suggested and implemented similar ideas. The so-called Harvard architecture of the Harvard Mark I, which was completed before EDVAC, also utilized a stored-program design using punched paper tape rather than electronic memory. The key difference between the von Neumann and Harvard architectures is that the latter separates the storage and treatment of CPU instructions and data, while the former uses the same memory space for both. Most modern CPUs are primarily von Neumann in design, but elements of the Harvard architecture are commonly seen as well.

As a digital device, a CPU is limited to a set of discrete states, and requires some kind of switching elements to differentiate between and change states. Prior to commercial development of the transistor, electrical relays and vacuum tubes (thermionic valves) were commonly used as switching elements. Although these had distinct speed advantages over earlier, purely mechanical designs, they were unreliable for various reasons. For example, building direct current sequential logic circuits out of relays requires additional hardware to cope with the problem of contact bounce. While vacuum tubes do not suffer from contact bounce, they must heat up before becoming fully operational, and they eventually cease to function due to slow contamination of their cathodes that occurs in the course of normal operation. If a tube's vacuum seal leaks, as sometimes happens, cathode contamination is accelerated. Usually, when a tube failed, the CPU would have to be diagnosed to locate the failed component so it could be replaced. Therefore, early electronic (vacuum tube based) computers were generally faster but less reliable than electromechanical (relay based) computers.

Tube computers like EDVAC tended to average eight hours between failures, whereas relay computers like the (slower, but earlier) Harvard Mark I failed very rarely.[1] In the end, tube based CPUs became dominant because the significant speed advantages afforded generally outweighed the reliability problems. Most of these early synchronous CPUs ran at low clock rates compared to modern microelectronic designs (see below for a discussion of clock rate). Clock signal frequencies ranging from 100 kHz to 4 MHz were very common at this time, limited largely by the speed of the switching devices they were built with.

Overview

The control unit

The control unit of the CPU contains circuitry that uses electrical signals to direct the entire computer system to carry out stored program instructions. The control unit does not execute program instructions; rather, it directs other parts of the system to do so. The control unit must communicate with both the arithmetic/logic unit and memory.

Discrete transistor and integrated circuit CPUs

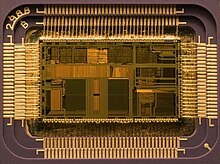

CPU, core memory, and external bus interface of a DEC PDP-8/I. Made of medium-scale integrated circuits

During this period, a method of manufacturing many transistors in a compact space gained popularity. The integrated circuit (IC) allowed a large number of transistors to be manufactured on a single semiconductor-based die, or "chip." At first only very basic non-specialized digital circuits such as NOR gates were miniaturized into ICs. CPUs based upon these "building block" ICs are generally referred to as "small-scale integration" (SSI) devices. SSI ICs, such as the ones used in the Apollo guidance computer, usually contained transistor counts numbering in multiples of ten. To build an entire CPU out of SSI ICs required thousands of individual chips, but still consumed much less space and power than earlier discrete transistor designs. As microelectronic technology advanced, an increasing number of transistors were placed on ICs, thus decreasing the quantity of individual ICs needed for a complete CPU. MSI and LSI (medium- and large-scale integration) ICs increased transistor counts to hundreds, and then thousands.

In 1964 IBM introduced its System/360 computer architecture which was used in a series of computers that could run the same programs with different speed and performance. This was significant at a time when most electronic computers were incompatible with one another, even those made by the same manufacturer. To facilitate this improvement, IBM utilized the concept of a microprogram (often called "microcode"), which still sees widespread usage in modern CPUs.[3] The System/360 architecture was so popular that it dominated the mainframe computer market for decades and left a legacy that is still continued by similar modern computers like the IBM zSeries. In the same year (1964), Digital Equipment Corporation (DEC) introduced another influential computer aimed at the scientific and research markets, the PDP-8. DEC would later introduce the extremely popular PDP-11 line that originally was built with SSI ICs but was eventually implemented with LSI components once these became practical. In stark contrast with its SSI and MSI predecessors, the first LSI implementation of the PDP-11 contained a CPU composed of only four LSI integrated circuits.[4]

Transistor-based computers had several distinct advantages over their predecessors. Aside from facilitating increased reliability and lower power consumption, transistors also allowed CPUs to operate at much higher speeds because of the short switching time of a transistor in comparison to a tube or relay. Thanks to both the increased reliability and the dramatically increased speed of the switching elements (which were almost exclusively transistors by this time), CPU clock rates in the tens of megahertz were obtained during this period. Additionally, while discrete transistor and IC CPUs were in heavy usage, new high-performance designs like SIMD (Single Instruction Multiple Data) vector processors began to appear. These early experimental designs later gave rise to the era of specialized supercomputers like those made by Cray Inc.

Microprocessors

Main article: Microprocessor

Previous generations of CPUs were implemented as discrete components and numerous small integrated circuits (ICs) on one or more circuit boards. Microprocessors, on the other hand, are CPUs manufactured on a very small number of ICs, usually just one. The overall smaller CPU size that results from being implemented on a single die means faster switching time because of physical factors like decreased gate parasitic capacitance. This has allowed synchronous microprocessors to have clock rates ranging from tens of megahertz to several gigahertz. Additionally, as the ability to construct exceedingly small transistors on an IC has increased, the complexity and number of transistors in a single CPU have increased dramatically. This widely observed trend is described by Moore's law, which has proven to be a fairly accurate predictor of the growth of CPU (and other IC) complexity to date.

While the complexity, size, construction, and general form of CPUs have changed drastically over the past sixty years, it is notable that the basic design and function has not changed much at all. Almost all common CPUs today can be very accurately described as von Neumann stored-program machines. As the aforementioned Moore's law continues to hold true, concerns have arisen about the limits of integrated circuit transistor technology. Extreme miniaturization of electronic gates is causing the effects of phenomena like electromigration and subthreshold leakage to become much more significant. These newer concerns are among the many factors causing researchers to investigate new methods of computing such as the quantum computer, as well as to expand the usage of parallelism and other methods that extend the usefulness of the classical von Neumann model.

Operation

The fundamental operation of most CPUs, regardless of the physical form they take, is to execute a sequence of stored instructions called a program. The program is represented by a series of numbers that are kept in some kind of computer memory. There are four steps that nearly all CPUs use in their operation: fetch, decode, execute, and writeback.

The first step, fetch, involves retrieving an instruction (which is represented by a number or sequence of numbers) from program memory. The location in program memory is determined by a program counter (PC), which stores a number that identifies the current position in the program. After an instruction is fetched, the PC is incremented by the length of the instruction word in terms of memory units.[5] Often, the instruction to be fetched must be retrieved from relatively slow memory, causing the CPU to stall while waiting for the instruction to be returned. This issue is largely addressed in modern processors by caches and pipeline architectures (see below).

The instruction that the CPU fetches from memory is used to determine what the CPU is to do. In the decode step, the instruction is broken up into parts that have significance to other portions of the CPU. The way in which the numerical instruction value is interpreted is defined by the CPU's instruction set architecture (ISA).[6] Often, one group of numbers in the instruction, called the opcode, indicates which operation to perform. The remaining parts of the number usually provide information required for that instruction, such as operands for an addition operation. Such operands may be given as a constant value (called an immediate value), or as a place to locate a value: a register or a memory address, as determined by some addressing mode. In older designs the portions of the CPU responsible for instruction decoding were unchangeable hardware devices. However, in more abstract and complicated CPUs and ISAs, a microprogram is often used to assist in translating instructions into various configuration signals for the CPU. This microprogram is sometimes rewritable so that it can be modified to change the way the CPU decodes instructions even after it has been manufactured.

After the fetch and decode steps, the execute step is performed. During this step, various portions of the CPU are connected so they can perform the desired operation. If, for instance, an addition operation was requested, the arithmetic logic unit (ALU) will be connected to a set of inputs and a set of outputs. The inputs provide the numbers to be added, and the outputs will contain the final sum. The ALU contains the circuitry to perform simple arithmetic and logical operations on the inputs (like addition and bitwise operations). If the addition operation produces a result too large for the CPU to handle, an arithmetic overflow flag in a flags register may also be set.

The final step, writeback, simply "writes back" the results of the execute step to some form of memory. Very often the results are written to some internal CPU register for quick access by subsequent instructions. In other cases results may be written to slower, but cheaper and larger, main memory. Some types of instructions manipulate the program counter rather than directly produce result data. These are generally called "jumps" and facilitate behavior like loops, conditional program execution (through the use of a conditional jump), and functions in programs.[7] Many instructions will also change the state of digits in a "flags" register. These flags can be used to influence how a program behaves, since they often indicate the outcome of various operations. For example, one type of "compare" instruction considers two values and sets a number in the flags register according to which one is greater. This flag could then be used by a later jump instruction to determine program flow.

After the execution of the instruction and writeback of the resulting data, the entire process repeats, with the next instruction cycle normally fetching the next-in-sequence instruction because of the incremented value in the program counter. If the completed instruction was a jump, the program counter will be modified to contain the address of the instruction that was jumped to, and program execution continues normally. In more complex CPUs than the one described here, multiple instructions can be fetched, decoded, and executed simultaneously. This section describes what is generally referred to as the "classic RISC pipeline", which in fact is quite common among the simple CPUs used in many electronic devices (often called microcontrollers). It largely ignores the important role of CPU cache, and therefore the access stage of the pipeline.
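The four steps described in this section can be sketched as a toy interpreter. This is a simplified illustration, not a model of any real processor: the six opcodes, the 24-bit instruction encoding, the four 8-bit registers, and the flag names are all assumptions made for the example.

```python
# Hypothetical opcodes for a toy four-register machine (not a real ISA).
LOADI, ADD, SUB, CMP, JGT, HALT = range(6)


def encode(opcode, a=0, b=0):
    """Pack an opcode and two 8-bit operands into a single instruction number."""
    return (opcode << 16) | (a << 8) | b


def run(program):
    """Execute program (a list of instruction numbers) until HALT is reached."""
    registers = [0, 0, 0, 0]
    flags = {"greater": False, "overflow": False}
    pc = 0                                    # program counter
    while True:
        instruction = program[pc]             # fetch
        pc += 1                               # advance to the next instruction
        opcode = instruction >> 16            # decode: split the number into fields
        a = (instruction >> 8) & 0xFF
        b = instruction & 0xFF
        # Execute, then write the result back to a register or to the flags.
        if opcode == HALT:
            return registers, flags
        elif opcode == LOADI:                 # load an immediate value
            registers[a] = b
        elif opcode == ADD:
            total = registers[a] + registers[b]
            flags["overflow"] = total > 0xFF  # result too large for 8 bits
            registers[a] = total & 0xFF
        elif opcode == SUB:
            registers[a] = (registers[a] - registers[b]) & 0xFF
        elif opcode == CMP:
            flags["greater"] = registers[a] > registers[b]
        elif opcode == JGT:
            if flags["greater"]:
                pc = a                        # a jump rewrites the program counter
        else:
            raise ValueError(f"unknown opcode {opcode}")


# Sample program: add 5 + 4 + 3 + 2 + 1 into register 0 using a countdown loop.
program = [
    encode(LOADI, 0, 0),   # r0 = 0 (running total)
    encode(LOADI, 1, 5),   # r1 = 5 (counter)
    encode(LOADI, 2, 1),   # r2 = 1 (step)
    encode(LOADI, 3, 0),   # r3 = 0 (loop limit)
    encode(ADD, 0, 1),     # r0 = r0 + r1
    encode(SUB, 1, 2),     # r1 = r1 - r2
    encode(CMP, 1, 3),     # set "greater" if r1 > r3
    encode(JGT, 4),        # if so, jump back to the ADD at address 4
    encode(HALT),
]

registers, flags = run(program)
print(registers[0])        # 15
```

The loop in the sample program arises entirely from the compare instruction setting a flag and the conditional jump rewriting the program counter, as described above.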

Design and implementation

Main article: CPU design

Integer range

The way a CPU represents numbers is a design choice that affects the most basic ways in which the device functions. Some early digital computers used an electrical model of the common decimal (base ten) numeral system to represent numbers internally. A few other computers have used more exotic numeral systems like ternary (base three). Nearly all modern CPUs represent numbers in binary form, with each digit being represented by some two-valued physical quantity such as a "high" or "low" voltage.[8]

Related to number representation is the size and precision of numbers that a CPU can represent. In the case of a binary CPU, a bit refers to one significant place in the numbers a CPU deals with. The number of bits (or numeral places) a CPU uses to represent numbers is often called "word size", "bit width", "data path width", or "integer precision" when dealing with strictly integer numbers (as opposed to floating point). This number differs between architectures, and often within different parts of the very same CPU. For example, an 8-bit CPU deals with a range of numbers that can be represented by eight binary digits (each digit having two possible values), that is, 2^8 or 256 discrete numbers. In effect, integer size sets a hardware limit on the range of integers the software run by the CPU can utilize.[9]
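The effect of a fixed word size can be imitated in a few lines of Python. This is a sketch only: Python's own integers are not limited to a fixed width, so the 8-bit limit is simulated here by masking, and the helper name `wrap_unsigned` is hypothetical.

```python
def wrap_unsigned(value, bits):
    """Keep only the low `bits` bits of value, as a fixed-width unsigned register would."""
    return value & ((1 << bits) - 1)


print(2 ** 8)                      # 256 distinct values fit in 8 bits
print(wrap_unsigned(255, 8))       # 255, the largest unsigned 8-bit value
print(wrap_unsigned(255 + 1, 8))   # 0, because the ninth bit is discarded
```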

Integer range can also affect the number of locations in memory the CPU can address (locate). For example, if a binary CPU uses 32 bits to represent a memory address, and each memory address represents one octet (8 bits), the maximum quantity of memory that CPU can address is 2^32 octets, or 4 GiB. This is a very simple view of CPU address space, and many designs use more complex addressing methods like paging in order to locate more memory than their integer range would allow with a flat address space.
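
The address-space arithmetic can be reproduced in a few lines. This is a sketch of the flat address space case only; the helper name and the `octets_per_address` parameter are assumptions made for illustration.

```python
GIB = 2**30  # one gibibyte, in octets


def addressable_octets(address_bits: int, octets_per_address: int = 1) -> int:
    """Octets reachable with a flat address space of the given width."""
    return (2**address_bits) * octets_per_address


print(addressable_octets(32) / GIB, "GiB with 32-bit addresses")
print(addressable_octets(16), "octets with 16-bit addresses")
```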

Higher levels of integer range require more structures to deal with the additional digits, and therefore more complexity, size, power usage, and general expense. It is not at all uncommon, therefore, to see 4- or 8-bit microcontrollers used in modern applications, even though CPUs with much higher range (such as 16, 32, 64, even 128-bit) are available. The simpler microcontrollers are usually cheaper, use less power, and therefore generate less heat, all of which can be major design considerations for electronic devices. However, in higher-end applications, the benefits afforded by the extra range (most often the additional address space) are more significant and often affect design choices. To gain some of the advantages afforded by both lower and higher bit lengths, many CPUs are designed with different bit widths for different portions of the device. For example, the IBM System/370 used a CPU that was primarily 32 bit, but it used 128-bit precision inside its floating point units to facilitate greater accuracy and range in floating point numbers.[3] Many later CPU designs use similar mixed bit width, especially when the processor is meant for general-purpose usage where a reasonable balance of integer and floating point capability is required.

Clock rate

Main article: Clock rate

The clock rate is the speed at which a microprocessor executes instructions. Every computer contains an internal clock that regulates the rate at which instructions are executed and synchronizes all the various computer components. The CPU requires a fixed number of clock ticks (or clock cycles) to execute each instruction. The faster the clock, the more instructions the CPU can execute per second.

Most CPUs, and indeed most sequential logic devices, are synchronous in nature.[10] That is, they are designed and operate on assumptions about a synchronization signal. This signal, known as a clock signal, usually takes the form of a periodic square wave. By calculating the maximum time that electrical signals can move in various branches of a CPU's many circuits, the designers can select an appropriate period for the clock signal.

This period must be longer than the amount of time it takes for a signal to move, or propagate, in the worst-case scenario. In setting the clock period to a value well above the worst-case propagation delay, it is possible to design the entire CPU and the way it moves data around the "edges" of the rising and falling clock signal. This has the advantage of simplifying the CPU significantly, both from a design perspective and a component-count perspective. However, it also carries the disadvantage that the entire CPU must wait on its slowest elements, even though some portions of it are much faster. This limitation has largely been compensated for by various methods of increasing CPU parallelism (see below).
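
As a rough illustration of how the slowest path bounds the clock, the sketch below picks a period from a set of path delays. The delay figures, the path names, and the 10% safety margin are invented for the example and do not describe any real CPU.

```python
def min_clock_period_ns(path_delays_ns, margin=0.10):
    """Clock period: worst-case propagation delay plus a safety margin."""
    worst_case = max(path_delays_ns.values())
    return worst_case * (1.0 + margin)


def max_clock_mhz(period_ns):
    """Convert a clock period in nanoseconds to a frequency in MHz."""
    return 1000.0 / period_ns


# Hypothetical propagation delays for three circuit paths, in nanoseconds.
delays = {"register_read": 0.6, "alu_add": 1.8, "cache_lookup": 2.5}
period = min_clock_period_ns(delays)
print(f"period {period:.2f} ns, at most {max_clock_mhz(period):.0f} MHz")
```

Even though two of the three paths would tolerate a much faster clock, the whole design runs at the rate set by the slowest one.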

However, architectural improvements alone do not solve all of the drawbacks of globally synchronous CPUs. For example, a clock signal is subject to the delays of any other electrical signal. Higher clock rates in increasingly complex CPUs make it more difficult to keep the clock signal in phase (synchronized) throughout the entire unit. This has led many modern CPUs to require multiple identical clock signals to be provided in order to avoid delaying a single signal significantly enough to cause the CPU to malfunction. Another major issue as clock rates increase dramatically is the amount of heat that is dissipated by the CPU. The constantly changing clock causes many components to switch regardless of whether they are being used at that time. In general, a component that is switching uses more energy than an element in a static state. Therefore, as clock rate increases, so does heat dissipation, causing the CPU to require more effective cooling solutions.
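
The link between clock rate and heat can be made concrete with the usual first-order model of dynamic (switching) power in CMOS logic, P = a * C * V^2 * f. This model is not given in the text above and is brought in here as an assumption; the numeric values are placeholders.

```python
def dynamic_power_watts(activity, capacitance_farads, voltage_volts, frequency_hz):
    """First-order switching power: activity * C * V^2 * f."""
    return activity * capacitance_farads * voltage_volts**2 * frequency_hz


# Placeholder values: half of 1 nF of switched capacitance toggles each cycle.
base = dynamic_power_watts(0.5, 1e-9, 1.2, 1e9)
doubled = dynamic_power_watts(0.5, 1e-9, 1.2, 2e9)
print(f"{base:.2f} W at 1 GHz, {doubled:.2f} W at 2 GHz")
```

Under this model, doubling the frequency at a fixed voltage doubles the switching power, and lowering the activity factor (for example by not clocking idle components) lowers it proportionally.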

One method of dealing with the switching of unneeded components is called clock gating, which involves turning off the clock signal to unneeded components (effectively disabling them). However, this is often regarded as difficult to implement and therefore does not see common usage outside of very low-power designs. One notable recent CPU design that uses clock gating is that of the IBM PowerPC-based Xbox 360, which uses extensive clock gating to reduce the power requirements of the console.[11]

Another method of addressing some of the problems with a global clock signal is the removal of the clock signal altogether. While removing the global clock signal makes the design process considerably more complex in many ways, asynchronous (or clockless) designs carry marked advantages in power consumption and heat dissipation in comparison with similar synchronous designs. While somewhat uncommon, entire asynchronous CPUs have been built without utilizing a global clock signal. Two notable examples of this are the ARM compliant AMULET and the MIPS R3000 compatible MiniMIPS. Rather than totally removing the clock signal, some CPU designs allow certain portions of the device to be asynchronous, such as using asynchronous ALUs in conjunction with superscalar pipelining to achieve some arithmetic performance gains. While it is not altogether clear whether totally asynchronous designs can perform at a comparable or better level than their synchronous counterparts, it is evident that they do at least excel in simpler math operations. This, combined with their excellent power consumption and heat dissipation properties, makes them very suitable for embedded computers.[12]

Parallelism

Main article: Parallel computing

The description of the basic operation of a CPU offered in the previous section describes the simplest form that a CPU can take. This type of CPU, usually referred to as subscalar, operates on and executes one instruction on one or two pieces of data at a time.

This process gives rise to an inherent inefficiency in subscalar CPUs. Since only one instruction is executed at a time, the entire CPU must wait for that instruction to complete before proceeding to the next instruction. As a result, the subscalar CPU gets "hung up" on instructions which take more than one clock cycle to complete execution. Even adding a second execution unit (see below) does not improve performance much; rather than one pathway being hung up, now two pathways are hung up and the number of unused transistors is increased. This design, wherein the CPU's execution resources can operate on only one instruction at a time, can only possibly reach scalar performance (one instruction per clock). However, the performance is nearly always subscalar (less than one instruction per cycle).
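
A small calculation shows why such a design is nearly always subscalar. In this sketch each instruction is represented only by the number of cycles it takes; the latencies are made up for illustration.

```python
def subscalar_ipc(latencies):
    """Instructions per cycle when each instruction must finish before the next starts."""
    total_cycles = sum(latencies)
    return len(latencies) / total_cycles


print(subscalar_ipc([1, 1, 1, 1]))  # every instruction takes one cycle
print(subscalar_ipc([1, 3, 1, 5]))  # two multi-cycle instructions
```

Only when every instruction completes in a single cycle does the rate reach one instruction per cycle; any multi-cycle instruction pulls it below that.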

Attempts to achieve scalar and better performance have resulted in a variety of design methodologies that cause the CPU to behave less linearly and more in parallel. When referring to parallelism in CPUs, two terms are generally used to classify these design techniques. Instruction level parallelism (ILP) seeks to increase the rate at which instructions are executed within a CPU (that is, to increase the utilization of on-die execution resources), and thread level parallelism (TLP) aims to increase the number of threads (effectively individual programs) that a CPU can execute simultaneously. The two methodologies differ both in how they are implemented and in the relative effectiveness they afford in increasing the CPU's performance for an application.[13]

Instruction level parallelism

Main articles: Instruction pipelining and Superscalar

Pipelining does, however, introduce the possibility for a situation where the result of the previous operation is needed to complete the next operation; a condition often termed data dependency conflict. To cope with this, additional care must be taken to check for these sorts of conditions and delay a portion of the instruction pipeline if this occurs. Naturally, accomplishing this requires additional circuitry, so pipelined processors are more complex than subscalar ones (though not very significantly so). A pipelined processor can become very nearly scalar, inhibited only by pipeline stalls (an instruction spending more than one clock cycle in a stage).
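
The cost of data dependencies can be sketched with a toy model of an in-order pipeline. The model assumes five stages, no forwarding between stages, and that a result can be read only in the cycle after it is written back; real pipelines usually reduce these stalls with bypass paths, so the numbers are illustrative only.

```python
def pipelined_cycles(program, depth=5):
    """Count cycles for an in-order pipeline with no forwarding.

    `program` is a list of (dest, sources) pairs. An instruction may enter
    the decode stage only after every source register has been written back.
    """
    if not program:
        return 0
    ready = {}   # register -> first cycle in which its new value can be read
    decode = 0   # decode cycle of the previous instruction (0 before any)
    for dest, sources in program:
        earliest = decode + 1  # in order: at most one decode per cycle
        for reg in sources:
            earliest = max(earliest, ready.get(reg, 0))  # stall on a hazard
        decode = earliest
        if dest is not None:
            ready[dest] = decode + depth - 1  # readable after write-back
    return decode + depth - 1  # cycle count when the last instruction retires


independent = [("r1", ()), ("r2", ()), ("r3", ()), ("r4", ())]
dependent = [("r1", ()), ("r2", ("r1",)), ("r3", ("r2",)), ("r4", ("r3",))]
print(pipelined_cycles(independent))
print(pipelined_cycles(dependent))
```

With these assumptions, four independent instructions finish in 8 cycles, while a chain in which each instruction needs the previous result takes 17, because every instruction after the first stalls in front of the decode stage.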

Most of the difficulty in the design of a superscalar CPU architecture lies in creating an effective dispatcher. The dispatcher needs to be able to quickly and correctly determine whether instructions can be executed in parallel, as well as dispatch them in such a way as to keep as many execution units busy as possible. This requires that the instruction pipeline is filled as often as possible and gives rise to the need in superscalar architectures for significant amounts of CPU cache. It also makes hazard-avoiding techniques like branch prediction, speculative execution, and out-of-order execution crucial to maintaining high levels of performance. By attempting to predict which branch (or path) a conditional instruction will take, the CPU can minimize the number of times that the entire pipeline must wait until a conditional instruction is completed. Speculative execution often provides modest performance increases by executing portions of code that may not be needed after a conditional operation completes. Out-of-order execution somewhat rearranges the order in which instructions are executed to reduce delays due to data dependencies. In addition, for single instruction, multiple data (SIMD) workloads, where a large amount of data of the same type has to be processed, modern processors can disable parts of the pipeline so that when a single instruction is executed many times, the CPU skips the fetch and decode phases. This can greatly increase performance in some cases, especially in highly repetitive programs such as video creation and photo processing software.
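
Branch prediction can be illustrated with a two-bit saturating counter, one common textbook scheme. The text above does not say which scheme any particular CPU uses, so the choice, the class name, and the starting state are assumptions for this sketch.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self):
        self.state = 2  # start in the "weakly taken" state (an arbitrary choice)

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def prediction_accuracy(outcomes):
    """Fraction of branch outcomes that the predictor guessed correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# A loop branch that is taken nine times and then falls through once.
print(prediction_accuracy([True] * 9 + [False]))
```

For a loop-closing branch like this one, only the final fall-through is mispredicted, so the pipeline waits on the branch once rather than on every iteration.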

In the case where a portion of the CPU is superscalar and part is not, the part which is not suffers a performance penalty due to scheduling stalls. The Intel P5 Pentium had two superscalar ALUs which could accept one instruction per clock each, but its FPU could not accept one instruction per clock. Thus the P5 was integer superscalar but not floating point superscalar. Intel's successor to the P5 architecture, P6, added superscalar capabilities to its floating point features, and therefore afforded a significant increase in floating point instruction performance.

Both simple pipelining and superscalar design increase a CPU's ILP by allowing a single processor to complete execution of instructions at rates surpassing one instruction per cycle (IPC).[15] Most modern CPU designs are at least somewhat superscalar, and nearly all general purpose CPUs designed in the last decade are superscalar. In later years some of the emphasis in designing high-ILP computers has been moved out of the CPU's hardware and into its software interface, or ISA. The strategy of the very long instruction word (VLIW) causes some ILP to become implied directly by the software, reducing the amount of work the CPU must perform to boost ILP and thereby reducing the design's complexity.

Thread-level parallelism

Another strategy of achieving performance is to execute multiple programs or threads in parallel. This area of research is known as parallel computing. In Flynn's taxonomy, this strategy is known as Multiple Instructions-Multiple Data or MIMD.

One technology used for this purpose was multiprocessing (MP). The initial flavor of this technology is known as symmetric multiprocessing (SMP), where a small number of CPUs share a coherent view of their memory system. In this scheme, each CPU has additional hardware to maintain a constantly up-to-date view of memory. By avoiding stale views of memory, the CPUs can cooperate on the same program and programs can migrate from one CPU to another. To increase the number of cooperating CPUs beyond a handful, schemes such as non-uniform memory access (NUMA) and directory-based coherence protocols were introduced in the 1990s. SMP systems are limited to a small number of CPUs while NUMA systems have been built with thousands of processors. Initially, multiprocessing was built using multiple discrete CPUs and boards to implement the interconnect between the processors. When the processors and their interconnect are all implemented on a single silicon chip, the technology is known as a multi-core microprocessor.

It was later recognized that finer-grain parallelism existed within a single program. A single program might have several threads (or functions) that could be executed separately or in parallel. Some of the earliest examples of this technology implemented input/output processing such as direct memory access as a separate thread from the computation thread. A more general approach to this technology was introduced in the 1970s when systems were designed to run multiple computation threads in parallel. This technology is known as multi-threading (MT). This approach is considered more cost-effective than multiprocessing, as only a small number of components within a CPU is replicated in order to support MT as opposed to the entire CPU in the case of MP. In MT, the execution units and the memory system including the caches are shared among multiple threads. The downside of MT is that the hardware support for multithreading is more visible to software than that of MP and thus supervisor software like operating systems have to undergo larger changes to support MT. One type of MT that was implemented is known as block multithreading, where one thread is executed until it is stalled waiting for data to return from external memory. In this scheme, the CPU would then quickly switch to another thread which is ready to run, with the switch often completed in one CPU clock cycle, as in UltraSPARC technology. Another type of MT is known as simultaneous multithreading, where instructions of multiple threads are executed in parallel within one CPU clock cycle.
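
Block multithreading can be sketched as a toy simulation. Each thread is a list of operations, where "C" is one cycle of computation and "M" is a memory access that stalls only its own thread. The four-cycle memory latency, the zero-cost thread switch, and the scheduling policy (stay on the current thread while it is runnable) are simplifying assumptions, not a description of any real processor.

```python
def run_block_multithreaded(threads, miss_latency=4):
    """Return the cycle count needed to finish all threads on one core."""
    position = [0] * len(threads)   # next operation index for each thread
    ready_at = [0] * len(threads)   # cycle at which each thread may run again
    cycle = 0
    current = 0
    while any(position[i] < len(t) for i, t in enumerate(threads)):
        # Prefer the current thread; otherwise switch to another ready thread.
        order = [current] + [i for i in range(len(threads)) if i != current]
        runnable = [i for i in order
                    if position[i] < len(threads[i]) and ready_at[i] <= cycle]
        if not runnable:
            cycle += 1  # every unfinished thread is waiting on memory
            continue
        current = runnable[0]
        operation = threads[current][position[current]]
        position[current] += 1
        cycle += 1
        if operation == "M":
            ready_at[current] = cycle + miss_latency  # thread stalls on memory
    return max([cycle] + ready_at)  # include any memory access still in flight


work = ["C", "M", "C"]
print(run_block_multithreaded([work]))        # one thread alone
print(run_block_multithreaded([work, work]))  # two threads sharing the core
```

Under these assumptions one thread takes 7 cycles alone, while two such threads finish together in 9 cycles rather than 14, because each thread's memory stall is partly hidden behind the other thread's work.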

For several decades from the 1970s to early 2000s, the focus in designing high performance general purpose CPUs was largely on achieving high ILP through technologies such as pipelining, caches, superscalar execution, out-of-order execution, etc. This trend culminated in large, power-hungry CPUs such as the Intel Pentium 4. By the early 2000s, CPU designers were thwarted from achieving higher performance from ILP techniques due to the growing disparity between CPU operating frequencies and main memory operating frequencies as well as escalating CPU power dissipation owing to more esoteric ILP techniques.

CPU designers then borrowed ideas from commercial computing markets such as transaction processing, where the aggregate performance of multiple programs, also known as throughput computing, was more important than the performance of a single thread or program.

This reversal of emphasis is evidenced by the proliferation of dual and multiple core CMP (chip-level multiprocessing) designs and notably, Intel's newer designs resembling its less superscalar P6 architecture. Late designs in several processor families exhibit CMP, including the x86-64 Opteron and Athlon 64 X2, the SPARC UltraSPARC T1, IBM POWER4 and POWER5, as well as several video game console CPUs like the Xbox 360's triple-core PowerPC design, and the PS3's 7-core Cell microprocessor.

Data parallelism

Main articles: Vector processor and SIMD

A less common but increasingly important paradigm of CPUs (and indeed, computing in general) deals with data parallelism. The processors discussed earlier are all referred to as some type of scalar device.[16] As the name implies, vector processors deal with multiple pieces of data in the context of one instruction. This contrasts with scalar processors, which deal with one piece of data for every instruction. Using Flynn's taxonomy, these two schemes of dealing with data are generally referred to as SIMD (single instruction, multiple data) and SISD (single instruction, single data), respectively. The great utility in creating CPUs that deal with vectors of data lies in optimizing tasks that tend to require the same operation (for example, a sum or a dot product) to be performed on a large set of data. Some classic examples of these types of tasks are multimedia applications (images, video, and sound), as well as many types of scientific and engineering tasks. Whereas a scalar CPU must complete the entire process of fetching, decoding, and executing each instruction and value in a set of data, a vector CPU can perform a single operation on a comparatively large set of data with one instruction. Of course, this is only possible when the application tends to require many steps which apply one operation to a large set of data.

Most early vector CPUs, such as the Cray-1, were associated almost exclusively with scientific research and cryptography applications. However, as multimedia has largely shifted to digital media, the need for some form of SIMD in general-purpose CPUs has become significant. Shortly after inclusion of floating point execution units started to become commonplace in general-purpose processors, specifications for and implementations of SIMD execution units also began to appear for general-purpose CPUs. Some of these early SIMD specifications like HP's Multimedia Acceleration eXtensions (MAX) and Intel's MMX were integer-only. This proved to be a significant impediment for some software developers, since many of the applications that benefit from SIMD primarily deal with floating point numbers. Progressively, these early designs were refined and remade into some of the common, modern SIMD specifications, which are usually associated with one ISA. Some notable modern examples are Intel's SSE and the PowerPC-related AltiVec (also known as VMX).[17]
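
The difference between the scalar and vector approaches can be modelled by counting how many instructions an element-wise addition needs. This is plain Python that only imitates the idea; the four-lane width is an assumption, and no real SIMD instructions are issued.

```python
def scalar_add(a, b):
    """One add instruction per pair of elements (SISD style)."""
    result, instructions = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        instructions += 1
    return result, instructions


def simd_add(a, b, lanes=4):
    """One add instruction per group of `lanes` element pairs (SIMD style)."""
    result, instructions = [], 0
    for start in range(0, len(a), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1  # the whole chunk counts as a single instruction
    return result, instructions


a = list(range(8))
b = [10] * 8
print(scalar_add(a, b))
print(simd_add(a, b))
```

Both functions return the same sums, but the modelled vector version needs one instruction per four elements instead of one per element.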

Performance

The performance or speed of a processor depends on the clock rate (generally given in multiples of hertz) and the instructions per clock (IPC), which together are the factors for the instructions per second (IPS) that the CPU can perform.[18] Many reported IPS values have represented "peak" execution rates on artificial instruction sequences with few branches, whereas realistic workloads consist of a mix of instructions and applications, some of which take longer to execute than others. The performance of the memory hierarchy also greatly affects processor performance, an issue barely considered in MIPS calculations. Because of these problems, various standardized tests such as SPECint have been developed to attempt to measure the real effective performance (often called "benchmark" for this purpose) in commonly used applications.

Processing performance of computers is increased by using multi-core processors, which essentially combine two or more individual processors (called cores in this sense) in one integrated circuit.[19] Ideally, a dual core processor would be nearly twice as powerful as a single core processor. In practice, however, the performance gain is far less, only about fifty percent,[19] due to imperfect software algorithms and implementation.
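
Both relationships can be put into numbers. The first function is the product described above; the second uses Amdahl's law, which is not mentioned in the text and is introduced here only as one possible way to account for a dual-core gain well below two. The clock rate, IPC, and parallel fraction are example values.

```python
def instructions_per_second(clock_hz, ipc):
    """IPS as the product of clock rate and instructions per clock."""
    return clock_hz * ipc


def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: speedup when only part of the work can use all cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


print(instructions_per_second(2e9, 0.5))  # a 2 GHz clock at 0.5 IPC
print(amdahl_speedup(2 / 3, 2))           # two cores, two thirds parallel
```

If two thirds of a program's work can be split across cores, the predicted dual-core speedup is about 1.5, in line with the roughly fifty percent gain quoted above.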

Motherboard

In many modern computers, including personal computers, the motherboard is the central printed circuit board (PCB). It holds many of the crucial components of the system and provides connectors for other peripherals. The motherboard is sometimes alternatively known as the mainboard, system board, or, on Apple computers, the logic board.[1] It is also sometimes casually shortened to mobo.[2]

Motherboard for an Acer desktop personal computer, showing the typical components and interfaces that are found on a motherboard. This model was made by Foxconn in 2008, and follows the ATX layout (known as the "form factor") usually employed for desktop computers. It is designed to work with AMD's Athlon 64 processor.

History

Prior to the advent of the microprocessor, a computer was usually built in a card-cage case or mainframe with components connected by a backplane consisting of a set of slots themselves connected with wires; in very old designs the wires were discrete connections between card connector pins, but printed circuit boards soon became the standard practice. The Central Processing Unit, memory and peripherals were housed on individual printed circuit boards which plugged into the backplane.

During the late 1980s and 1990s, it became economical to move an increasing number of peripheral functions onto the motherboard (see below). In the late 1980s, motherboards began to include single ICs (called Super I/O chips) capable of supporting a set of low-speed peripherals: keyboard, mouse, floppy disk drive, serial ports, and parallel ports. As of the late 1990s, many personal computer motherboards supported a full range of audio, video, storage, and networking functions without the need for any expansion cards at all; higher-end systems for 3D gaming and computer graphics typically retained only the graphics card as a separate component.

The early pioneers of motherboard manufacturing were Micronics, Mylex, AMI, DTK, Hauppauge, Orchid Technology, Elitegroup, DFI, and a number of Taiwan-based manufacturers.

The most popular computers such as the Apple II and IBM PC had published schematic diagrams and other documentation which permitted rapid reverse-engineering and third-party replacement motherboards. Usually intended for building new computers compatible with the exemplars, many motherboards offered additional performance or other features and were used to upgrade the manufacturer's original equipment.

The term mainboard is applied to devices with a single board and no additional expansions or capability. In modern terms this would include embedded systems and controlling boards in televisions, washing machines, etc. A motherboard specifically refers to a printed circuit board with expansion capability.

Overview

A motherboard, like a backplane, provides the electrical connections by which the other components of the system communicate, but unlike a backplane, it also connects the central processing unit and hosts other subsystems and devices.

A typical desktop computer has its microprocessor, main memory, and other essential components connected to the motherboard. Other components such as external storage, controllers for video display and sound, and peripheral devices may be attached to the motherboard as plug-in cards or via cables, although in modern computers it is increasingly common to integrate some of these peripherals into the motherboard itself.

An important component of a motherboard is the microprocessor's supporting chipset, which provides the supporting interfaces between the CPU and the various buses and external components. This chipset determines, to an extent, the features and capabilities of the motherboard.

Modern motherboards include, at a minimum:

- sockets (or slots) in which one or more microprocessors may be installed[3]

- slots into which the system's main memory is to be installed (typically in the form of DIMM modules containing DRAM chips)

- a chipset which forms an interface between the CPU's front-side bus, main memory, and peripheral buses

- non-volatile memory chips (usually Flash ROM in modern motherboards) containing the system's firmware or BIOS

- a clock generator which produces the system clock signal to synchronize the various components

- slots for expansion cards (these interface to the system via the buses supported by the chipset)

- power connectors, which receive electrical power from the computer power supply and distribute it to the CPU, chipset, main memory, and expansion cards.[4]

Given the high thermal design power of high-speed computer CPUs and components, modern motherboards nearly always include heat sinks and mounting points for fans to dissipate excess heat.

CPU sockets

Main article: CPU socket

A CPU socket or slot is an electrical component that attaches to a printed circuit board (PCB) and is designed to house a CPU (also called a microprocessor). It is a special type of integrated circuit socket designed for very high pin counts. A CPU socket provides many functions, including a physical structure to support the CPU, support for a heat sink, facilitating replacement (as well as reducing cost), and most importantly, forming an electrical interface both with the CPU and the PCB. CPU sockets are found on the motherboards of most desktop and server computers (laptops typically use surface mount CPUs), particularly those based on the Intel x86 architecture. The CPU socket type and motherboard chipset must support the CPU series and speed.

Integrated peripherals

For example, the ECS RS485M-M,[6] a typical modern budget motherboard for computers based on AMD processors, has on-board support for a very large range of peripherals:

- disk controllers for a floppy disk drive, up to 2 PATA drives, and up to 6 SATA drives (including RAID 0/1 support)

- integrated graphics controller supporting 2D and 3D graphics, with VGA and TV output

- integrated sound card supporting 8-channel (7.1) audio and S/PDIF output

- Fast Ethernet network controller for 10/100 Mbit networking

- USB 2.0 controller supporting up to 12 USB ports

- IrDA controller for infrared data communication (e.g. with an IrDA-enabled cellular phone or printer)

- temperature, voltage, and fan-speed sensors that allow software to monitor the health of computer components

Peripheral card slots

A typical motherboard of 2009 will have a different number of connections depending on its standard. A standard ATX motherboard will typically have one PCI-E 16x connection for a graphics card, two conventional PCI slots for various expansion cards, and one PCI-E 1x (which will eventually supersede PCI). A standard EATX motherboard will have one PCI-E 16x connection for a graphics card, and a varying number of PCI and PCI-E 1x slots. It can sometimes also have a PCI-E 4x slot. (This varies between brands and models.)

Some motherboards have two PCI-E 16x slots, either to allow more than two monitors without special hardware or to use a multi-card graphics technology such as SLI (for Nvidia) or CrossFire (for ATI). These technologies allow two graphics cards to be linked together for better performance in intensive graphical computing tasks, such as gaming and video editing.

As of 2007, virtually all motherboards come with at least four USB ports on the rear, with at least two connections on the board internally for wiring additional front ports that may be built into the computer's case. An Ethernet port is also included, which accepts a standard networking cable for connecting the computer to a network or a modem. A sound chip is also usually included on the motherboard, to allow sound output without the need for any extra components. This allows computers to be far more multimedia-based than before. Some motherboards contain video outputs on the back panel for integrated graphics solutions (either embedded in the motherboard, or combined with the microprocessor, such as the Intel HD Graphics). A separate card may still be used.

Temperature and reliability

Main article: Computer cooling

Motherboards are generally air cooled with heat sinks often mounted on larger chips, such as the Northbridge, in modern motherboards. If the motherboard is not cooled properly, it can cause the computer to crash. Passive cooling, or a single fan mounted on the power supply, was sufficient for many desktop computer CPUs until the late 1990s; since then, most have required CPU fans mounted on their heat sinks, due to rising clock speeds and power consumption. Most motherboards have connectors for additional case fans as well. Newer motherboards have integrated temperature sensors to detect motherboard and CPU temperatures, and controllable fan connectors which the BIOS or operating system can use to regulate fan speed. Some computers (which typically have high-performance microprocessors, large amounts of RAM, and high-performance video cards) use a water-cooling system instead of many fans.

Some small form factor computers and home theater PCs designed for quiet and energy-efficient operation boast fan-less designs. This typically requires the use of a low-power CPU, as well as careful layout of the motherboard and other components to allow for heat sink placement.
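
The fan regulation mentioned above, where the BIOS or operating system sets fan speed from sensor readings, is often described as a "fan curve". The sketch below is a hypothetical linear curve; the temperature thresholds and duty-cycle limits are invented defaults and are not taken from any BIOS.

```python
def fan_duty_percent(temp_c, min_temp=40.0, max_temp=80.0,
                     min_duty=30.0, max_duty=100.0):
    """Map a sensor temperature to a fan duty cycle by linear interpolation."""
    if temp_c <= min_temp:
        return min_duty   # cool enough: run the fan at its quiet minimum
    if temp_c >= max_temp:
        return max_duty   # hot: run the fan at full speed
    fraction = (temp_c - min_temp) / (max_temp - min_temp)
    return min_duty + fraction * (max_duty - min_duty)


for reading in (30, 60, 90):
    print(reading, "C ->", fan_duty_percent(reading), "%")
```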

A 2003 study[7] found that some spurious computer crashes and general reliability issues, ranging from screen image distortions to I/O read/write errors, can be attributed not to software or peripheral hardware but to aging capacitors on PC motherboards. Ultimately this was shown to be the result of a faulty electrolyte formulation.[8]

- For more information on premature capacitor failure on PC motherboards, see capacitor plague.

Form factor

Main article: Comparison of computer form factors

Motherboards are produced in a variety of sizes and shapes called computer form factors, some of which are specific to individual computer manufacturers. However, the motherboards used in IBM-compatible systems are designed to fit various case sizes. As of 2007, most desktop computer motherboards use one of these standard form factors, even those found in Macintosh and Sun computers, which have not traditionally been built from commodity components. The current desktop PC form factor of choice is ATX. The form factors of a case, its motherboard, and its PSU must all match, though some smaller form factor motherboards of the same family will fit larger cases. For example, an ATX case will usually accommodate a microATX motherboard.

Laptop computers generally use highly integrated, miniaturized and customized motherboards. This is one of the reasons that laptop computers are difficult to upgrade and expensive to repair. Often the failure of one laptop component requires the replacement of the entire motherboard, which is usually more expensive than a desktop motherboard due to the large number of integrated components.

Bootstrapping using the BIOS

Main article: booting

Motherboards contain some non-volatile memory to initialize the system and load an operating system from some external peripheral device. Microcomputers such as the Apple II and IBM PC used ROM chips, mounted in sockets on the motherboard. At power-up, the central processor would load its program counter with the address of the boot ROM and start executing ROM instructions, displaying system information on the screen and running memory checks, which would in turn start loading memory from an external or peripheral device (disk drive). If none is available, then the computer can perform tasks from other memory stores or display an error message, depending on the model and design of the computer and version of the BIOS.

Most modern motherboard designs use a BIOS, stored in an EEPROM chip soldered or socketed to the motherboard, to bootstrap an operating system. When power is first applied to the motherboard, the BIOS firmware tests and configures memory, circuitry, and peripherals. This Power-On Self Test (POST) may include testing some of the following devices:

- video adapter

- cards inserted into slots, such as conventional PCI

- floppy drive

- thermistors, voltages, and fan speeds for hardware monitoring

- CMOS used to store BIOS setup configuration

- keyboard and mouse

- network controller

- optical drives: CD-ROM or DVD-ROM

- SCSI hard drive

- IDE, EIDE, or SATA hard disk

- security devices, such as a fingerprint reader or the state of a latch switch to detect intrusion

- USB devices, such as a memory storage device
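
The power-up sequence described above (self-test first, then an attempt to load an operating system from a peripheral device, with an error message as the fallback) can be illustrated with a minimal sketch. This is a simulation only, not real firmware: the function name `bootstrap`, the check and device names, and the choice to stop on any failed check are all assumptions made for illustration, and actual BIOS implementations differ in how they handle failures.

```python
def bootstrap(post_checks, boot_devices):
    """Simulate a power-on self test followed by a boot-device search.

    post_checks: list of (name, check) pairs; check() returns True on success.
    boot_devices: list of (name, load) pairs; load() returns a loaded image
    (any object) or None when the device holds nothing bootable.
    All names here are hypothetical, chosen only for this sketch.
    """
    # Run every self-test and collect the names of those that fail.
    failed = [name for name, check in post_checks if not check()]
    if failed:
        # Assumption: this sketch halts on any failure; real firmware may not.
        return "POST failed: " + ", ".join(failed)

    # Try each boot device in the configured order.
    for name, load in boot_devices:
        image = load()
        if image is not None:
            return f"booting {image} from {name}"

    # Nothing bootable was found on any device.
    return "no bootable device"
```

For example, calling `bootstrap` with a passing memory check, an empty floppy drive, and a hard disk that returns an image falls through the floppy and reports a boot from the hard disk.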

[edit] See also

- Accelerated Graphics Port (AGP)

- Backplane

- BIOS

- Central Processing Unit

- Chipset

- Computer case

- Conventional PCI

- Daughterboard

- Front-side bus

- Industry Standard Architecture (ISA)

- List of motherboard manufacturers

- Offboard

- Overclocking

- PCI Express

- Single-board computer

[edit] External links

- List of motherboard manufacturers and links to BIOS updates

- What is a motherboard?

- The Making of a Motherboard: ECS Factory Tour

- The Making of a Motherboard: Gigabyte Factory Tour

- Motherboards at the Open Directory Project

- Front Panel I/O Connectivity Design Guide - v1.3 (pdf file) (February 2005)

Mouse (computing)

[edit] Naming

The first known publication of the term "mouse" as a pointing device is in Bill English's 1965 publication "Computer-Aided Display Control".[1]

The online Oxford Dictionaries entry for mouse states that the plural for the small rodent is mice, while the plural for the small computer-connected device is either mice or mouses. However, the usage section of the entry states that the more common plural is mice, and that the first recorded use of the term in the plural (1984) is mice as well.[2] The fourth edition of The American Heritage Dictionary of the English Language endorses both computer mice and computer mouses as correct plural forms for computer mouse. Some authors of technical documents may prefer either mouse devices or the more generic pointing devices. The plural mouses treats mouse as a "headless noun".

[edit] Early mice

Early mouse patents. From left to right: Opposing track wheels by Engelbart, Nov. 1970, U.S. Patent 3,541,541. Ball and wheel by Rider, Sept. 1974, U.S. Patent 3,835,464. Ball and two rollers with spring by Opocensky, Oct. 1976, U.S. Patent 3,987,685

Independently, Douglas Engelbart at the Stanford Research Institute invented the first mouse prototype in 1963,[4] with the assistance of his colleague Bill English. They christened the device the mouse as early models had a cord attached to the rear part of the device looking like a tail and generally resembling the common mouse.[5] Engelbart never received any royalties for it, as his patent ran out before it became widely used in personal computers.[6]

The invention of the mouse was just a small part of Engelbart's much larger project, aimed at augmenting human intellect.[7]

The first computer mouse, held by inventor Douglas Engelbart, showing the wheels that make contact with the working surface

Just a few weeks before Engelbart released his demo in 1968, a mouse had already been developed and published by the German company Telefunken. Unlike Engelbart's mouse, the Telefunken model had a ball, as can be seen in most later models. From 1970 it was shipped as part of, and sold together with, Telefunken computers. Some models from the year 1972 are still well preserved.[10]

The second marketed integrated mouse, shipped as part of a computer and intended for personal computer navigation, came with the Xerox 8010 Star Information System in 1981. However, the mouse remained relatively obscure until the appearance of the Apple Macintosh, which included an updated version of the original Lisa Mouse. In 1984 PC columnist John C. Dvorak dismissively commented on the newly released computer with a mouse: "There is no evidence that people want to use these things".[11][12]

[edit] Variants

[edit] Mechanical mice

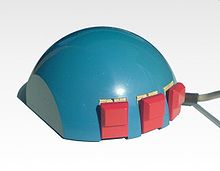

Operating an opto-mechanical mouse.

Bill English, builder of Engelbart's original mouse,[13] invented the ball mouse in 1972 while working for Xerox PARC.[14]

The ball mouse replaced the external wheels with a single ball that could rotate in any direction. It came as part of the hardware package of the Xerox Alto computer. Perpendicular chopper wheels housed inside the mouse's body chopped beams of light on the way to light sensors, thus detecting the motion of the ball. This variant of the mouse resembled an inverted trackball and became the predominant form used with personal computers throughout the 1980s and 1990s. The Xerox PARC group also settled on the modern technique of using both hands to type on a full-size keyboard and grabbing the mouse when required.

The ball mouse has two freely rotating rollers, located 90 degrees apart. One roller detects the forward–backward motion of the mouse and the other the left–right motion. Opposite the two rollers is a third one (white, in the photo, at 45 degrees) that is spring-loaded to push the ball against the other two rollers. Each roller is on the same shaft as an encoder wheel that has slotted edges; the slots interrupt infrared light beams to generate electrical pulses that represent wheel movement. Each wheel's disc has a pair of light beams, located so that a given beam becomes interrupted, or again starts to pass light freely, when the other beam of the pair is about halfway between changes. Simple logic circuits interpret the relative timing to indicate which direction the wheel is rotating. This scheme is sometimes called quadrature encoding of the wheel rotation, as the two optical sensors produce signals that are approximately in quadrature phase.

The mouse sends these signals to the computer system via the mouse cable, directly as logic signals in very old mice such as the Xerox mice, and via a data-formatting IC in modern mice. The driver software in the system converts the signals into motion of the mouse cursor along X and Y axes on the screen.
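
The direction logic for one encoder wheel can be sketched in software. The example below is only an illustration of quadrature decoding, not the circuit used in any particular mouse: it assumes the two light sensors are sampled as 0/1 values (A, B), and the function name `decode_quadrature` and the choice of which rotation counts as positive are hypothetical.

```python
# Order in which the (A, B) sensor pair changes for one direction of rotation.
# Only one of the two signals changes between neighbouring states.
_SEQUENCE = [(0, 0), (0, 1), (1, 1), (1, 0)]


def decode_quadrature(samples):
    """Return the net step count for a sequence of (A, B) sensor samples.

    A positive count means the states advanced through _SEQUENCE in order;
    a negative count means they moved through it in reverse. Which physical
    direction that corresponds to depends on sensor placement (assumed here).
    """
    position = 0
    previous = None
    for current in samples:
        if previous is None or current == previous:
            previous = current
            continue  # first sample, or no change on either beam
        delta = (_SEQUENCE.index(current) - _SEQUENCE.index(previous)) % 4
        if delta == 1:
            position += 1  # one step in the positive direction
        elif delta == 3:
            position -= 1  # one step in the negative direction
        # delta == 2 means both beams changed at once: a step was missed,
        # and the direction cannot be determined, so it is ignored.
        previous = current
    return position
```

A mouse would run one such decoder per roller, and the two resulting counts would give the X and Y movement that the driver turns into cursor motion.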

The ball is mostly steel, with a precision spherical rubber surface. The weight of the ball, given an appropriate working surface under the mouse, provides a reliable grip so the mouse's movement is transmitted accurately.

Ball mice and wheel mice were manufactured for Xerox by Jack Hawley, doing business as The Mouse House in Berkeley, California, starting in 1975.[15][16]

Based on another invention by Jack Hawley, proprietor of the Mouse House, Honeywell produced another type of mechanical mouse.[17][18] Instead of a ball, it had two wheels rotating at off axes. Keytronic later produced a similar product.[19]

Modern computer mice took form at the École polytechnique fédérale de Lausanne (EPFL) under the inspiration of Professor Jean-Daniel Nicoud and at the hands of engineer and watchmaker André Guignard.[20] This new design incorporated a single hard rubber mouseball and three buttons, and remained a common design until the mainstream adoption of the scroll-wheel mouse during the 1990s.[21] In 1985, René Sommer added a microprocessor to Nicoud's and Guignard's design.[22] Through this innovation, Sommer is credited with inventing a significant component of the mouse, which made it more "intelligent;"[22] though optical mice from Mouse Systems had incorporated microprocessors by 1984.[23]

Another type of mechanical mouse, the "analog mouse" (now generally regarded as obsolete), uses potentiometers rather than encoder wheels, and is typically designed to be plug-compatible with an analog joystick. The "Color Mouse", originally marketed by Radio Shack for their Color Computer (but also usable on MS-DOS machines equipped with analog joystick ports, provided the software accepted joystick input) was the best-known example.

[edit] Optical and laser mice

Main article: Optical mouse

Optical mice use one or more light-emitting diodes (LEDs) and an imaging array of photodiodes to detect movement relative to the underlying surface, rather than the internal moving parts of a mechanical mouse. A laser mouse is an optical mouse that uses coherent (laser) light.

[edit] Inertial and gyroscopic mice

Often called "air mice" since they do not require a surface to operate, inertial mice use a tuning fork or other accelerometer (US Patent 4787051) to detect rotary movement for every axis supported. The most common models (manufactured by Logitech and Gyration) work using two degrees of rotational freedom and are insensitive to spatial translation. The user requires only small wrist rotations to move the cursor, reducing user fatigue or "gorilla arm". Usually cordless, they often have a switch to deactivate the movement circuitry between uses, allowing the user freedom of movement without affecting the cursor position. A patent for an inertial mouse claims that such mice consume less power than optically based mice, and offer increased sensitivity, reduced weight and increased ease of use.[24] In combination with a wireless keyboard, an inertial mouse can offer alternative ergonomic arrangements which do not require a flat work surface, potentially alleviating some types of repetitive motion injuries related to workstation posture.

[edit] 3D mice

Also known as bats,[25] flying mice, or wands,[26] these devices generally function through ultrasound and provide at least three degrees of freedom. Probably the best-known example is 3DConnexion/Logitech's SpaceMouse from the early 1990s.

In the late 1990s Kantek introduced the 3D RingMouse. This wireless mouse was worn on a ring around a finger, which enabled the thumb to access three buttons. The mouse was tracked in three dimensions by a base station.[27] Despite a certain appeal, it was eventually discontinued because it did not provide sufficient resolution.

A recent consumer 3D pointing device is the Wii Remote. While primarily a motion-sensing device (that is, it can determine its orientation and direction of movement), the Wii Remote can also detect its spatial position by comparing the distance and position of the lights from the IR emitter using its integrated IR camera (since the nunchuk accessory lacks a camera, it can only tell its current heading and orientation). The obvious drawback to this approach is that it can only produce spatial coordinates while its camera can see the sensor bar.

A mouse-related controller called the SpaceBall™[28] has a ball placed above the work surface that can easily be gripped. With spring-loaded centering, it sends both translational and angular displacements on all six axes, in both directions for each.

In November 2010 a German company called Axsotic introduced a new concept of 3D mouse called the 3D Spheric Mouse. This new concept of a true 6 DOF input device uses a ball that rotates in three axes without any limitations.[29]

[edit] Tactile mice

In 2000, Logitech introduced the "tactile mouse", which contained a small actuator that made the mouse vibrate. Such a mouse can augment user-interfaces with haptic feedback, such as giving feedback when crossing a window boundary. To surf by touch requires the user to be able to feel depth or hardness; this ability was realized with the first electrorheological tactile mice[30] but never marketed.

[edit] Connectivity and communication protocols

To transmit their input, typical cabled mice use a thin electrical cord terminating in a standard connector, such as RS-232C, PS/2, ADB or USB. Cordless mice instead transmit data via infrared radiation (see IrDA) or radio (including Bluetooth), although many such cordless interfaces are themselves connected through the aforementioned wired serial buses.

While the electrical interface and the format of the data transmitted by commonly available mice are currently standardized on USB, in the past they varied between different manufacturers. A bus mouse used a dedicated interface card for connection to an IBM PC or compatible computer.

Mouse use in DOS applications became more common after the introduction of the Microsoft mouse, largely because Microsoft provided an open standard for communication between applications and mouse driver software. Thus, any application written to use the Microsoft standard could use a mouse with a Microsoft-compatible driver (even if the mouse hardware itself was incompatible with Microsoft's). The Microsoft driver standard communicates mouse movements in standard units called "mickeys".
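
As an illustration of how an application might turn relative movement counts into a cursor position, the sketch below accumulates mickeys and converts them to pixels. It does not reproduce the Microsoft driver interface: the class name `CursorTracker`, the default ratio of 8 mickeys per pixel, and the convention that positive Y moves down the screen are all assumptions made for this example.

```python
class CursorTracker:
    """Turn relative mouse counts (mickeys) into a clamped pixel position."""

    def __init__(self, width, height, mickeys_per_pixel=8):
        self.width, self.height = width, height
        self.ratio = mickeys_per_pixel  # hypothetical scale factor
        self.x, self.y = width // 2, height // 2  # start at screen centre
        self._rem_x = 0  # mickeys not yet worth a whole pixel
        self._rem_y = 0

    def move(self, dx_mickeys, dy_mickeys):
        """Apply one movement report and return the new (x, y) position."""
        self._rem_x += dx_mickeys
        self._rem_y += dy_mickeys
        # Convert whole pixels only, truncating toward zero, and keep the
        # leftover mickeys so that slow movements are not lost.
        step_x = int(self._rem_x / self.ratio)
        step_y = int(self._rem_y / self.ratio)
        self._rem_x -= step_x * self.ratio
        self._rem_y -= step_y * self.ratio
        # Keep the cursor inside the screen.
        self.x = min(max(self.x + step_x, 0), self.width - 1)
        self.y = min(max(self.y + step_y, 0), self.height - 1)
        return self.x, self.y
```

Because the mouse reports only relative movement, the application (or driver) has to keep the running position itself, which is why the leftover counts are stored between calls.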

[edit] Serial interface and protocol